As organizations scale, the hardest part of execution is not setting direction but maintaining visibility into whether the business is actually holding together as change unfolds.

That loss of visibility almost always traces back to cadence design: KPIs and OKRs are reviewed together, with the same expectations, in the same forums, despite serving fundamentally different decision needs.

KPIs describe the current condition of the system. OKRs are designed to deliberately change that system. When both are reviewed on the same rhythm, KPIs become background context rather than real constraints, and OKRs are judged prematurely, long before meaningful change can realistically appear.

The result is steady activity and apparent alignment, paired with a shrinking window to intervene before outcomes are already locked in.

The practical question is not whether KPIs or OKRs matter more, but when each should be reviewed in order to preserve decision leverage.

The Functional Difference That Determines Review Cadence

Cadence design only works when the functional separation between KPIs and OKRs is explicit and enforced.

- KPIs describe whether the business is operating within healthy limits across dimensions such as capacity, reliability, efficiency, and customer experience.

- OKRs describe whether a focused set of bets is producing meaningful improvement relative to a specific objective.

Because business health can deteriorate gradually and compound invisibly, KPIs require frequent observation. Because meaningful change takes time to materialize and validate, OKRs require fewer but more deliberate checkpoints.

Reviewing both on the same cadence collapses this distinction and creates a false sense of control, where teams feel informed but are actually reacting too late.

In practice, this collapse shows up when teams spend time explaining why KPIs moved after the fact, or defending OKR progress without acknowledging that underlying constraints have already shifted.

Weekly Reviews: Detect Health Drift Before It Becomes a Constraint

Weekly reviews should be designed to preserve optionality by surfacing risk early, not to evaluate performance or assign blame.

At a weekly cadence, KPIs should be reviewed as indicators of whether execution is occurring inside safe operating boundaries.

The intent is not deep analysis, but early detection. Leaders should leave weekly reviews with a clear sense of whether the system remains capable of supporting the current level of ambition.

Weekly KPI reviews should focus on:

- Whether current values remain within expected operating ranges given execution intensity.

- Whether short-term trends suggest emerging strain, saturation, or degradation.

- Whether any metric is moving in a way that would materially constrain active OKRs if left unaddressed.

OKRs should appear in weekly reviews only as a dependency check. The purpose of that check is to test whether the assumptions behind each objective still hold given current health signals.

Weekly scoring, progress percentages, or detailed activity narratives tend to shift the conversation toward justification rather than intervention, which defeats the purpose of early review.

A useful test is simple: if the weekly discussion ends without identifying at least one potential adjustment or risk worth monitoring, the meeting is likely focused on reporting rather than sensing.

Monthly Reviews: Translate Signal into Steering Decisions

Monthly reviews exist to convert accumulated signal into course correction while meaningful options still exist.

KPIs should be reviewed monthly to understand whether observed movements represent noise, recovery, or structural change. Looking across several weeks allows teams to distinguish between temporary fluctuations and sustained trends that demand action.

At this cadence, commentary becomes essential, because numbers alone rarely explain why performance is changing.

Monthly KPI reviews should examine:

- Whether trends are stabilizing, improving, or deteriorating over time.

- Whether earlier interventions are having the intended effect.

- Whether constraints are tightening in ways that require tradeoffs across teams.

OKRs should be reviewed monthly for trajectory rather than completion. This is the point where leadership still has leverage to adjust scope, sequencing, or resourcing without invalidating the entire cycle.

Monthly OKR reviews should therefore focus on whether effort is compounding toward the objective or dissipating across initiatives that feel productive but do not materially change outcomes.

When monthly reviews are skipped or treated casually, teams lose the last realistic opportunity to steer before the quarter hardens.

Quarterly Reviews: Evaluate Change, Not Operational Health

Quarterly reviews are the only point at which OKRs should be formally evaluated, because meaningful change cannot be judged reliably on shorter intervals.

OKRs should be reviewed quarterly to determine whether the objective produced real improvement, what assumptions proved incorrect, and how learning should shape the next cycle. This is a learning and direction-setting exercise, not a performance defense or retrospective justification.

KPIs, by contrast, should not be scored quarterly. They should be assessed for continued relevance.

As the business evolves, the metrics that best represent health will also change, and quarterly reviews provide the appropriate moment to refine definitions, adjust thresholds, or retire KPIs that no longer reflect operational reality.

Quarterly KPI discussions should focus on:

- Whether each KPI still represents a meaningful constraint or enabler of execution.

- Whether targets and definitions remain appropriate given current scale.

- Whether new KPIs are needed to reflect emerging risks.

Treating KPIs as static, quarter-scored artifacts freezes the operating model and disconnects metrics from reality.

Tracking KPIs and OKRs on Different Cadences (In Practice)

A clear separation between KPIs and OKRs only works if the system supports different review rhythms without friction.

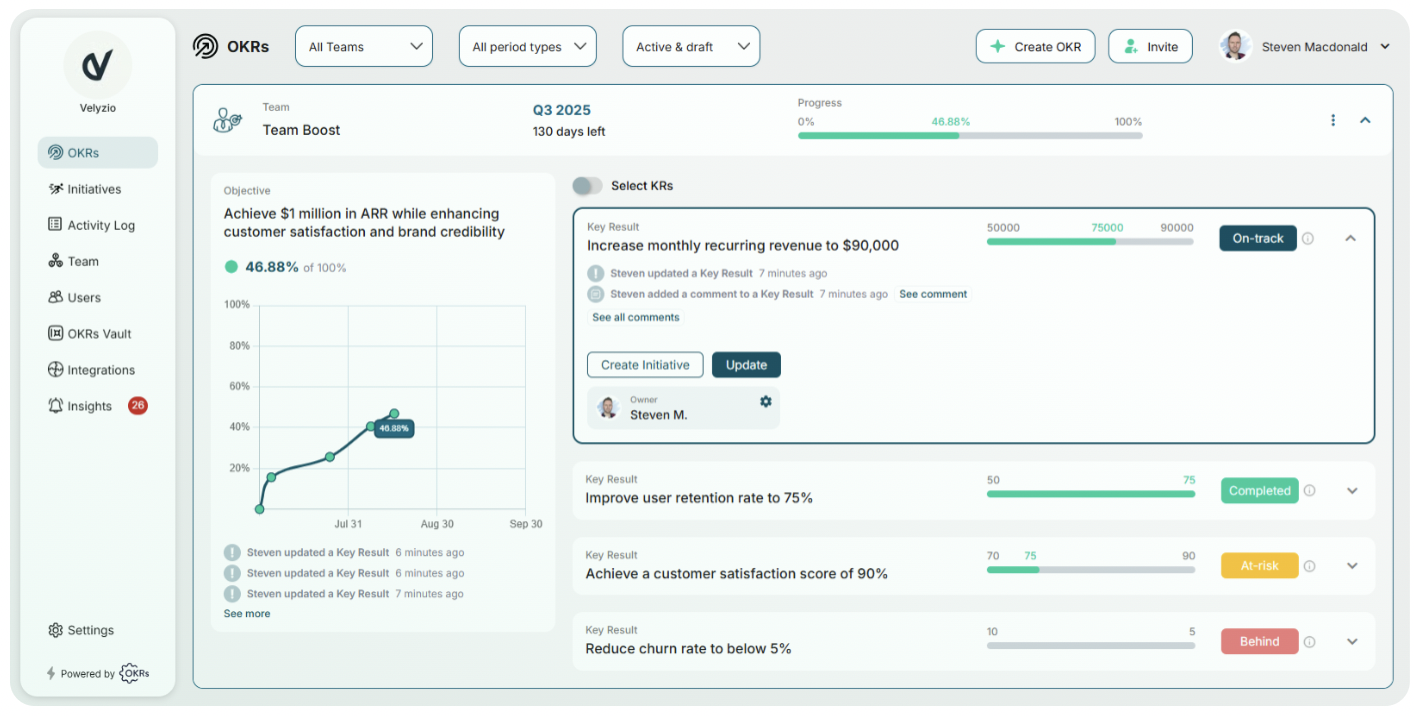

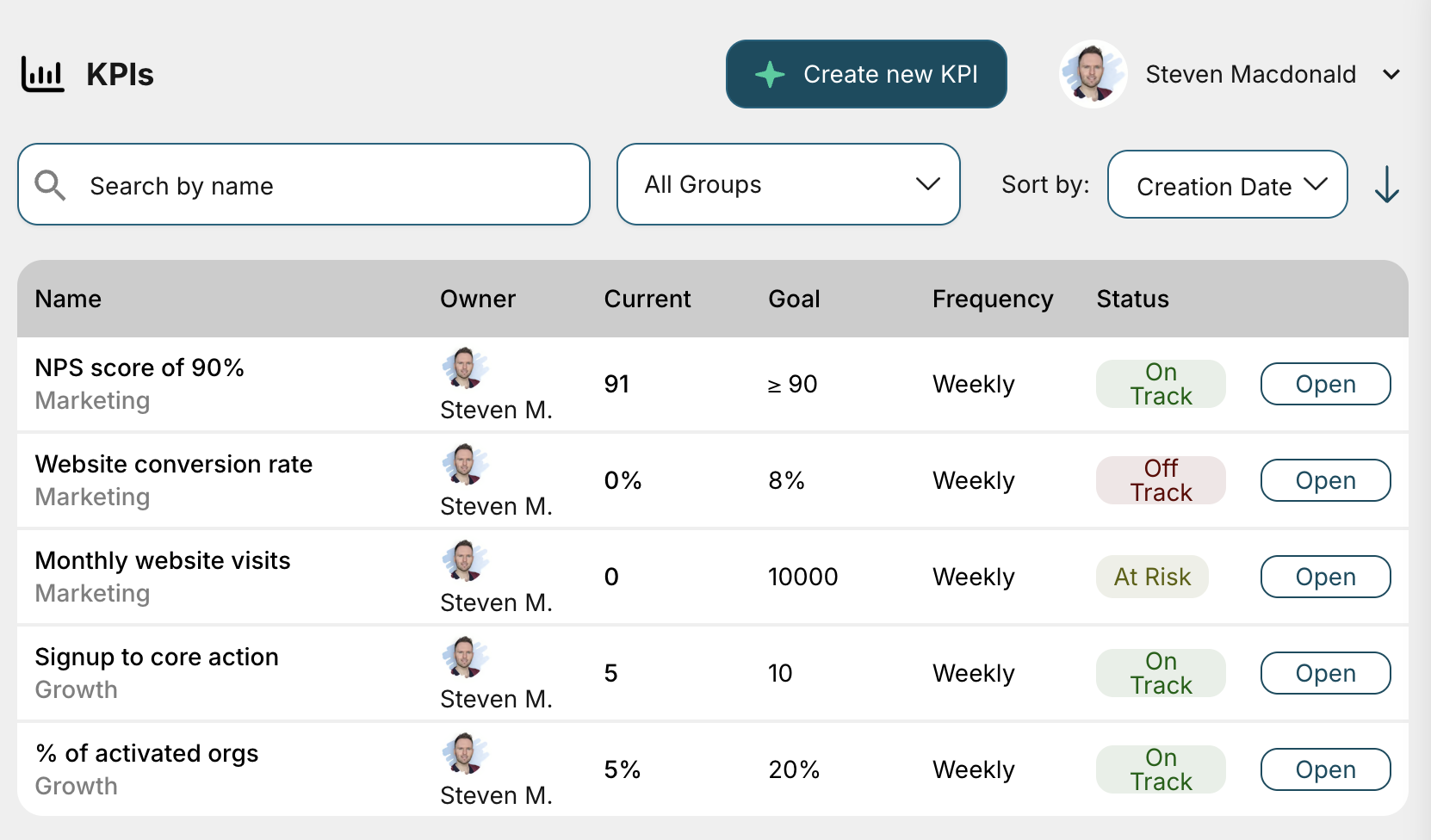

In OKRs Tool, KPIs and OKRs are tracked independently, so teams can monitor business health continuously while managing change on longer horizons.

KPIs are designed to reflect ongoing business conditions, which is why they can be reviewed and updated on a weekly or monthly cadence. This allows teams to maintain visibility into stability, capacity, and risk as execution unfolds, without forcing those signals into goal progress discussions.

OKRs, by contrast, are tracked on monthly, quarterly, or annual cycles, with the option to set custom date ranges where needed. This makes it possible to evaluate progress toward change at intervals that align with how outcomes actually materialize, rather than reacting to noise week by week.

In practice, this separation enables teams to:

- Review KPIs frequently to detect early drift, strain, or emerging constraints.

- Review OKRs at deliberate checkpoints to assess trajectory and adjust inputs.

- Avoid scoring OKRs based on short-term KPI fluctuations.

- Keep health monitoring and outcome evaluation connected, but not conflated.

Because both KPIs and OKRs live in the same system, teams can see them side by side during reviews without forcing them into the same cadence or decision framework.

KPIs provide context for OKRs, and OKRs provide direction for where change is expected, while each retains its own rhythm and purpose.

This structure reinforces the operating model described throughout this article: KPIs protect the system, OKRs apply pressure to improve it, and cadence determines whether teams can act while decisions still matter.

Review Cadence, by Signal Type

When KPIs and OKRs are reviewed on the cadence that matches their purpose, teams gain earlier signal, clearer decisions, and far more control over outcomes.

This expanded view makes it explicit that cadence is not just about timing, but about ownership, decision rights, and the type of judgment each review is meant to support.

Why Cadence Discipline Is the Difference Between Planning and Operating

Cadence discipline is what restores execution leverage. KPIs provide early visibility into whether the system can sustain execution. OKRs apply pressure to change what matters most.

Together, they allow leadership to make informed tradeoffs before outcomes are inevitable.

When cadence is wrong, OKRs become planning rituals and KPIs become historical artifacts. When cadence is right, the system surfaces reality early enough to change decisions, not just explain results.

This discipline is what turns OKRs from a quarterly exercise into an operating system that supports sustained execution as complexity increases.